4D Radar SLAM

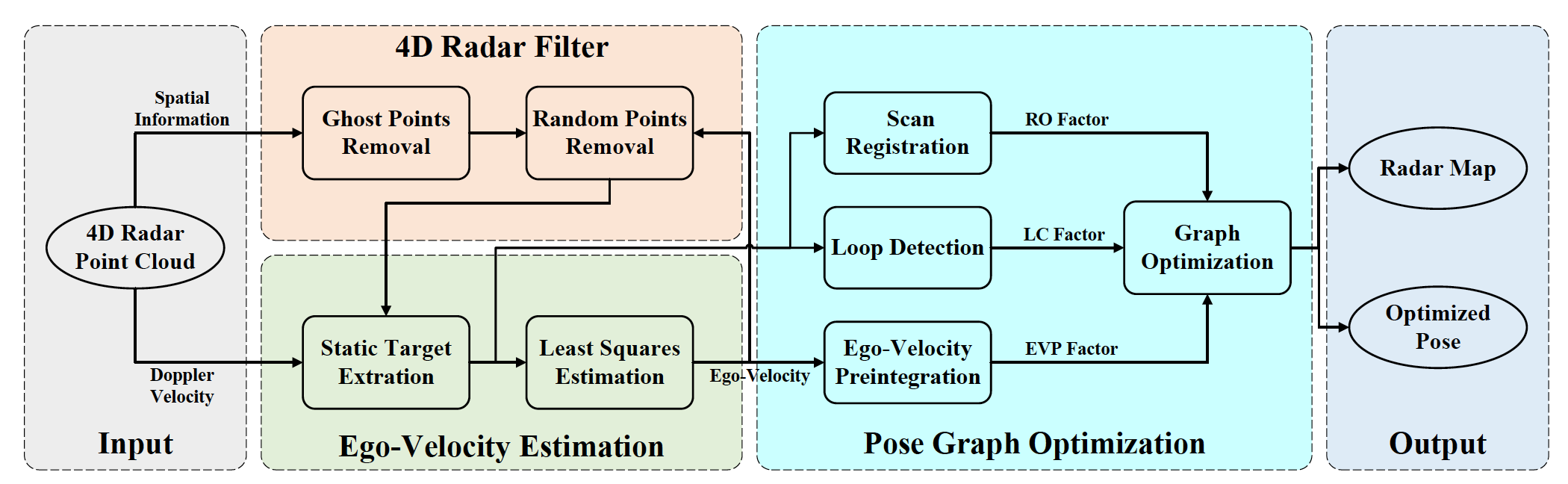

We develop a SLAM algorithm based on 4D radar data.

This project is supported by ZF (China) Investment Co., Ltd. (the legal entity of ZF Friedrichshafen AG in China).

If you are interested in the 4D radar SLAM data set, see the Software & Data sets page.

(For more details, see: Xingyi Li, Han Zhang, Weidong Chen. “4D Radar-Based Pose Graph SLAM With Ego-Velocity Pre-Integration Factor,” IEEE Robotics and Automation Letters, vol. 8, no. 8, pp. 5124-5131, August 2023.)

Millimeter-wave (mmWave) radars have been widely used for Simultaneous Localization and Mapping (SLAM), especially in autonomous driving. Compared to cameras and LiDARs, mmWave radars are robust to adverse weather and lighting conditions, so they remain reliable across diverse environments. They are also more affordable, which makes them popular for integration in autonomous vehicles.

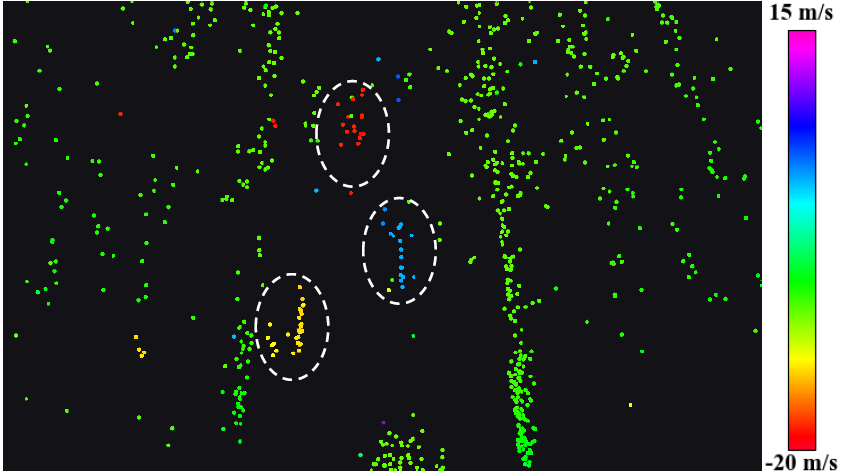

Conventional radars fall into two categories: scanning radars and automotive radars [8]. Scanning radars sweep the environment over 360 degrees and provide 2D planar images without velocity information, whereas automotive radars have a smaller field of view (FoV) and provide sparse planar point clouds with relative radial Doppler velocity. 4D radars have a FoV similar to that of automotive radars but provide a denser 3D point cloud with richer information per point: range, azimuth, elevation, and Doppler velocity. With this additional information and increased resolution, 4D radars open up new opportunities for SLAM applications.
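
To make the four measured quantities concrete, the following is a minimal Python sketch, not the implementation from our paper. It converts a detection (range, azimuth, elevation) into a Cartesian point and, assuming every detection comes from a static object, estimates the sensor's ego-velocity from the Doppler readings by least squares. The names `RadarDetection`, `direction`, `to_cartesian`, and `estimate_ego_velocity` are hypothetical, and the angle and sign conventions (x forward, y left, z up, Doppler positive when the target recedes) are assumptions that differ between sensors.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarDetection:
    """One 4D radar return (hypothetical container for illustration)."""
    range_m: float        # distance to the target, in metres
    azimuth_rad: float    # horizontal angle from the x-axis, positive to the left
    elevation_rad: float  # vertical angle from the horizontal plane, positive up
    doppler_mps: float    # radial velocity, positive when the target recedes


def direction(det):
    """Unit vector pointing from the sensor to the detection."""
    ce = math.cos(det.elevation_rad)
    return (ce * math.cos(det.azimuth_rad),
            ce * math.sin(det.azimuth_rad),
            math.sin(det.elevation_rad))


def to_cartesian(det):
    """3D point in the sensor frame: range times the unit direction."""
    return tuple(det.range_m * c for c in direction(det))


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def estimate_ego_velocity(detections):
    """Least-squares ego-velocity, assuming all detections are static.

    For a static target with unit direction d, a sensor moving with
    velocity v measures doppler = -d . v (assumed sign convention).
    Stacking all detections gives the normal equations A v = b with
    A = sum(d d^T) and b = -sum(d * doppler), solved by Cramer's rule.
    """
    dirs = [direction(det) for det in detections]
    a = [[sum(d[i] * d[j] for d in dirs) for j in range(3)] for i in range(3)]
    b = [-sum(d[i] * det.doppler_mps for d, det in zip(dirs, detections))
         for i in range(3)]
    det_a = _det3(a)
    if abs(det_a) < 1e-12:
        raise ValueError("detections do not constrain all three velocity axes")
    velocity = []
    for k in range(3):
        # Replace column k of A with b, as Cramer's rule requires.
        a_k = [[b[i] if j == k else a[i][j] for j in range(3)] for i in range(3)]
        velocity.append(_det3(a_k) / det_a)
    return tuple(velocity)
```

Calling `estimate_ego_velocity` on a list of `RadarDetection` objects returns a velocity tuple in the sensor frame. On real data the static-scene assumption does not hold, so moving targets and noisy returns would have to be rejected before the fit.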

Because 4D radar point clouds are sparse and noisy, SLAM methods designed for LiDAR perform very poorly when applied to them directly. Conventional radar SLAM systems are not suitable for 4D radars either, because the data characteristics differ. We therefore develop an accurate and robust SLAM framework for 4D radars.

We have tested our 4D radar SLAM algorithm in an industrial park and on our campus under multiple scenarios. The results are shown in the video below.