We present five datasets collected with a legged-wheel robot, containing LiDAR data, IMU data, joint sensor data, and ground truth. The datasets cover a variety of challenging scenes. To the best of our knowledge, few public datasets have been collected from legged-wheel robots. We hope our datasets support the development of legged-wheel robot SLAM in the community.

Our datasets are now available:

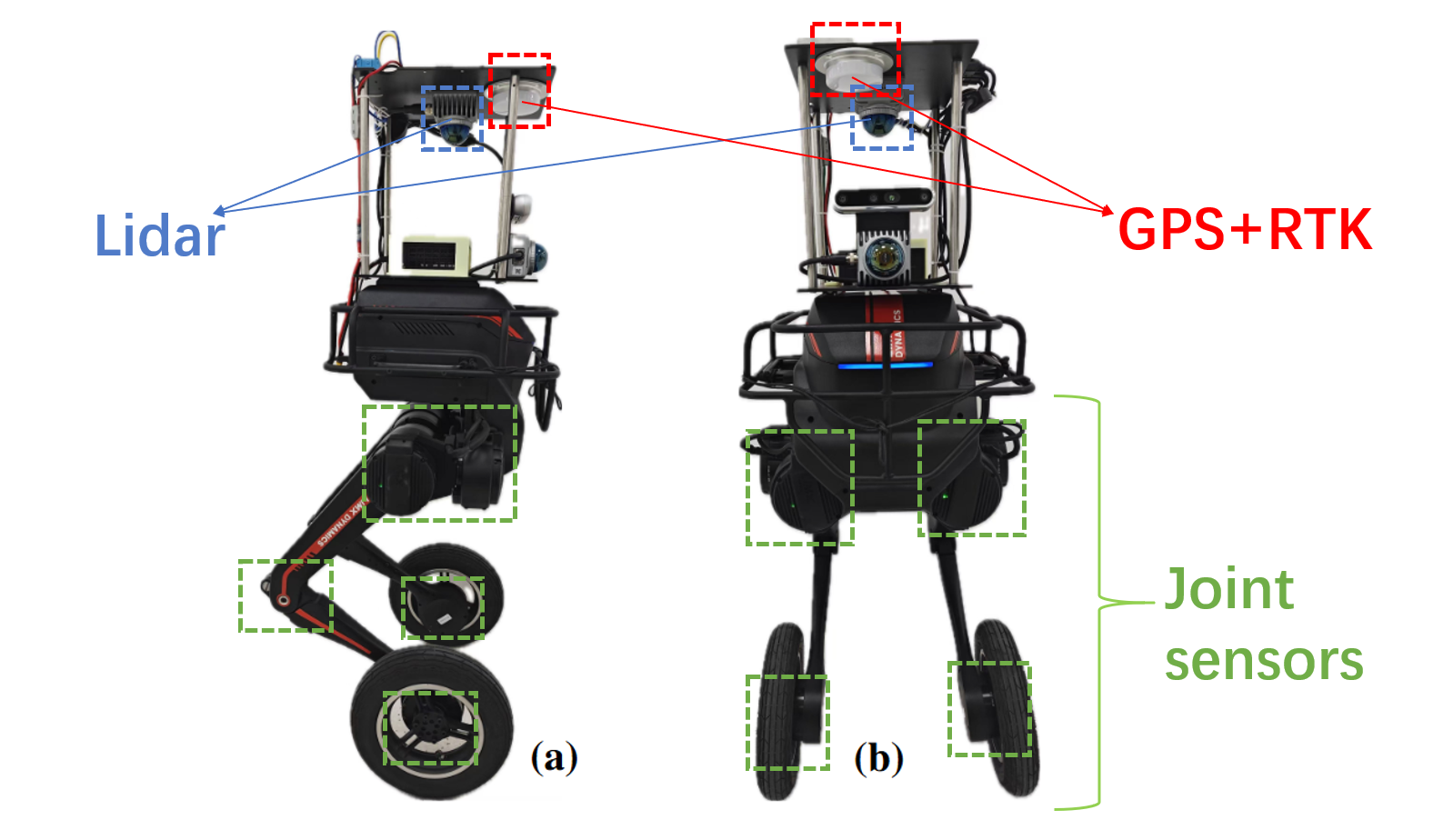

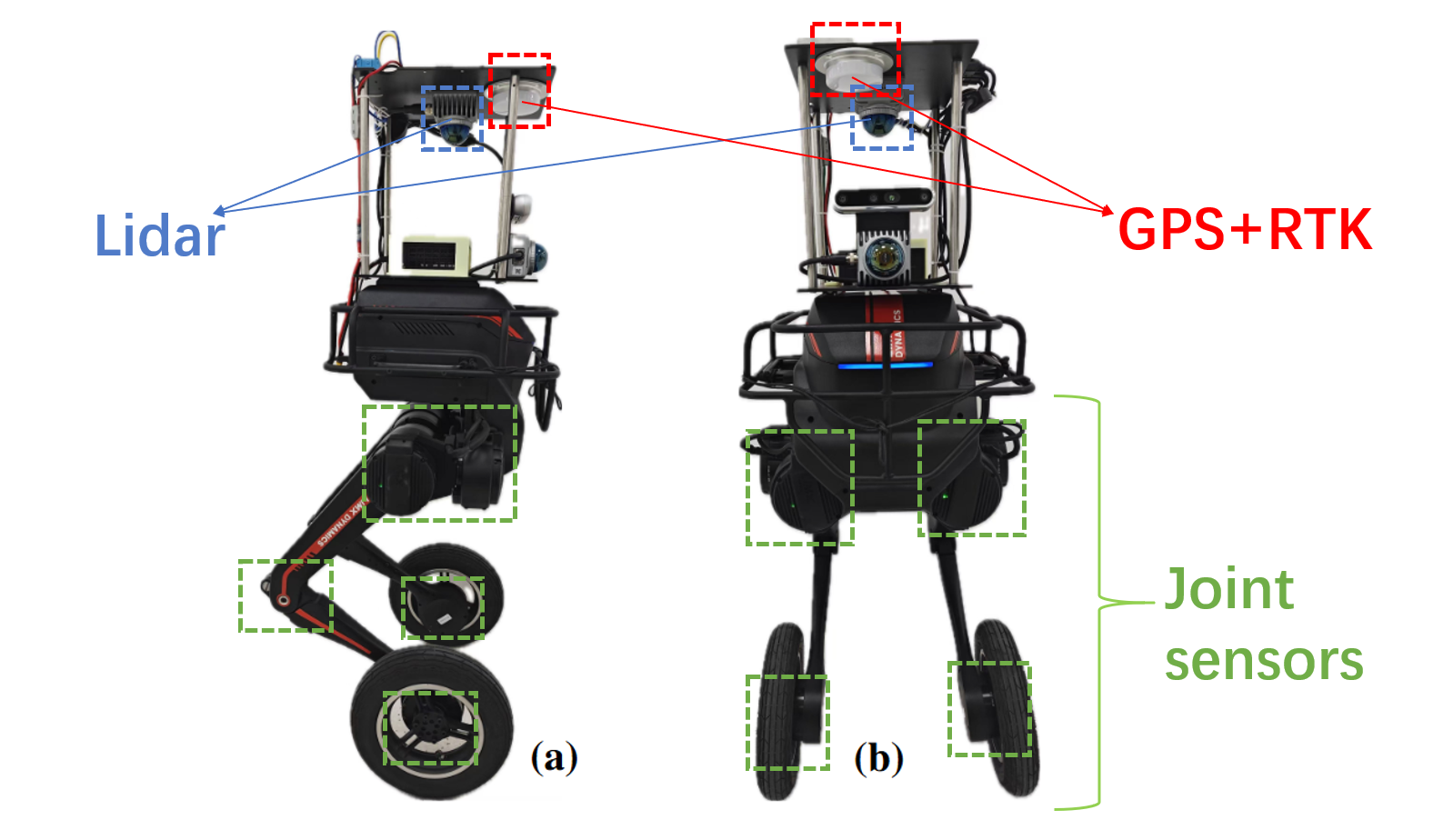

The legged-wheel robot used to collect the datasets is shown in Fig. 1. It is equipped with two MID360 LiDARs, a BG-610M RTK GNSS module, and a RealSense D435i RGB-D camera. Note that the datasets include only the LiDAR and IMU data from the two MID360 LiDARs, the GPS-RTK data from the RTK GNSS module, and the joint sensor data from the robot's API. Data from the D435i is not included.

LiDAR1 (top-mounted, upside-down): This LiDAR is mounted upside-down on the top of the robot. Its z-axis points downward, its x-axis points forward along the robot's heading, and its y-axis follows from the right-hand rule, pointing to the robot's right. It outputs 10 Hz point clouds (topic: /livox/lidar_10_192_1_141) and 200 Hz IMU data (topic: /livox/imu_10_192_1_141).
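As a quick check of this frame convention: with x forward and z downward, the right-hand rule (x × y = z, equivalently y = z × x) puts y toward the robot's right, so LiDAR1's frame is forward-right-down. The minimal sketch below assumes a forward-left-up (FLU) body frame and ignores LiDAR1's translation from the body origin; `lidar1_to_body` and the other names are hypothetical helpers for illustration. It derives the y-axis and the resulting rotation diag(1, -1, -1).

```python
# Sketch: express LiDAR1 (upside-down) points in a forward-left-up (FLU) body frame.
# Assumptions (hypothetical): body frame is FLU; LiDAR1's translation is ignored.

def cross(a, b):
    """Cross product of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )

# Body-frame unit vectors (FLU convention).
FORWARD = (1.0, 0.0, 0.0)
DOWN = (0.0, 0.0, -1.0)

# LiDAR1 axes expressed in body coordinates: x forward, z down, y = z x x.
X_L1 = FORWARD
Z_L1 = DOWN
Y_L1 = cross(Z_L1, X_L1)  # (0.0, -1.0, 0.0): the robot's right

# Rotation from LiDAR1 to body: column j is LiDAR1 axis j in body coordinates.
R_BODY_L1 = [[X_L1[i], Y_L1[i], Z_L1[i]] for i in range(3)]

def lidar1_to_body(p):
    """Rotate a LiDAR1 point (x, y, z) into the FLU body frame."""
    return tuple(sum(R_BODY_L1[i][j] * p[j] for j in range(3)) for i in range(3))
```

For example, a return 3 m along LiDAR1's +z axis (straight down) maps to z = -3 in the FLU body frame, i.e. below the sensor.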

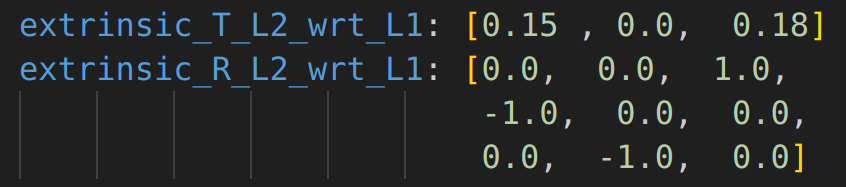

LiDAR2 (front-mounted): This LiDAR is mounted on the front of the robot. Its pose relative to LiDAR1 is given by the extrinsic transformation shown in Fig. 2. It outputs 10 Hz point clouds (topic: /livox/lidar_10_192_1_123) and 200 Hz IMU data (topic: /livox/imu_10_192_1_123).
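A common first step is to express LiDAR2 scans in the LiDAR1 frame so that the two clouds can be merged. The sketch below assumes the Fig. 2 extrinsic is the pose of LiDAR2 in the LiDAR1 frame, i.e. p_L1 = R p_L2 + t. The helper names and the `R_EXAMPLE`/`T_EXAMPLE` values are hypothetical placeholders, not the calibrated extrinsic; substitute the values from Fig. 2.

```python
# Sketch: map LiDAR2 points into the LiDAR1 frame with a rigid extrinsic (R, t).
# Assumption: (R, t) is the pose of LiDAR2 in LiDAR1, so p_L1 = R @ p_L2 + t.
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]

def transform_points(R: Sequence[Sequence[float]], t: Sequence[float],
                     points: List[Point]) -> List[Point]:
    """Apply p_L1 = R @ p_L2 + t to every point given in the LiDAR2 frame."""
    return [
        tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
        for p in points
    ]

def merge_clouds(cloud_l1: List[Point], cloud_l2: List[Point],
                 R: Sequence[Sequence[float]], t: Sequence[float]) -> List[Point]:
    """Concatenate a LiDAR1 scan with a LiDAR2 scan expressed in the LiDAR1 frame."""
    return list(cloud_l1) + transform_points(R, t, cloud_l2)

# HYPOTHETICAL placeholder extrinsic; replace with the calibrated values from Fig. 2.
R_EXAMPLE = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
T_EXAMPLE = [0.5, 0.0, -0.25]
```

Because the two sensors are separate devices, merging scans in practice may also require time alignment or motion compensation, which this sketch omits.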

The RTK GNSS system provides centimeter-level positioning and supplies the robot's ground-truth pose in the Universal Transverse Mercator (UTM) coordinate system at 10 Hz.
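To compare an estimated trajectory with the 10 Hz ground truth, one option is to shift the UTM positions to a local origin (UTM eastings and northings are large numbers) and interpolate them at the LiDAR scan timestamps. The sketch below assumes the ground truth has already been exported as `(timestamp, easting, northing, altitude)` tuples sorted by time; that layout and the helper names are assumptions, not part of the dataset specification. It interpolates position only; orientation would need spherical interpolation (slerp).

```python
# Sketch: local-origin shift and linear interpolation of UTM ground-truth positions.
# Assumed (hypothetical) layout: (timestamp [s], easting [m], northing [m], altitude [m]).
from bisect import bisect_left
from typing import List, Tuple

Sample = Tuple[float, float, float, float]

def to_local(samples: List[Sample]) -> List[Sample]:
    """Shift positions so the first sample becomes the origin."""
    _, e0, n0, a0 = samples[0]
    return [(t, e - e0, n - n0, a - a0) for t, e, n, a in samples]

def interpolate_position(samples: List[Sample], t_query: float) -> Tuple[float, ...]:
    """Linearly interpolate position at t_query; clamps outside the time range."""
    times = [s[0] for s in samples]
    if t_query <= times[0]:
        return tuple(samples[0][1:])
    if t_query >= times[-1]:
        return tuple(samples[-1][1:])
    k = bisect_left(times, t_query)  # times[k - 1] < t_query <= times[k]
    t_a, *p_a = samples[k - 1]
    t_b, *p_b = samples[k]
    w = (t_query - t_a) / (t_b - t_a)
    return tuple(a + w * (b - a) for a, b in zip(p_a, p_b))
```

Usage: call `to_local` once on the whole ground-truth sequence, then `interpolate_position` at each point cloud's timestamp.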

The joint sensors output joint positions at 500 Hz (topic: /joint_states).
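Since only joint positions are provided, joint velocities, if needed, have to be estimated. The minimal sketch below uses backward finite differences, assuming timestamps in seconds and a fixed joint order across messages (in ROS, match joints by the `name` field of each message if the order could change). `joint_velocities` is a hypothetical helper, not part of the robot's API.

```python
# Sketch: backward-difference joint velocities from timestamped joint positions.
# Assumptions (hypothetical): stamps in seconds; same joint order in every message.
from typing import List, Sequence

def joint_velocities(stamps: Sequence[float],
                     positions: Sequence[Sequence[float]]) -> List[List[float]]:
    """Return one velocity list per message after the first.

    At 500 Hz, consecutive stamps are nominally 1 / 500 = 0.002 s apart,
    but the actual time difference is used so dropped messages are handled.
    """
    vels = []
    for k in range(1, len(stamps)):
        dt = stamps[k] - stamps[k - 1]
        if dt <= 0.0:
            raise ValueError(f"non-increasing timestamp at index {k}")
        vels.append([(q1 - q0) / dt for q0, q1 in zip(positions[k - 1], positions[k])])
    return vels
```

Differencing over roughly 2 ms strongly amplifies encoder quantization noise, so low-pass filtering the result is usually advisable.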

We collected the datasets in several challenging scenes on the campus of Shanghai Jiao Tong University. During data collection, the average robot speed was below 1 m/s. In particular:

These datasets are licensed under the Creative Commons Attribution 4.0 International License (CC BY 4.0).